Tableau Prep is new tool from Tableau which helps to help people transform, clean and massage their data for analysis quickly. With simple drag and drop features to simplify the complicated tasks of joins, unions, pivots, aggregate or create calculate fields the data quickly.

Let’s quickly see how Tableau prep works and for that one needs to download Tableau prep and install it from here. For this exercise, we are going to use superstore data which can be download from here.

Objective of this exercise is to get output something like this:

Final prep file will look like this:

Open Tableau Prep after installing and you can see below screen:

Step 1: Connect to Data source:

Tableau Prep provides flexibility to connect more than 25 data sources right from csv to Amazon redshift to greenplum database.

Click on Connections –> Select Microsoft Excel –> select the file downloaded earlier from path

Once data file is loaded into Prep, you will get data like below. Here, on left hand side we see various sheets in the excel file (source) and on right side we get to see plain white area where we can drag one or multiple source files or tables. Next is we will drag orders into white area and we will see bottom half of right side list of columns in the file.

Here we can select the columns required for further processing or deselect the unwanted fields. For the purpose of this post, I selected Customer id, order date, product id, category, sales field from the input section:

Step 2: Finding the customer level first purchase date with its sales, number of distinct product ids and categories

Tableau prep gives various options to perform next step in data preparation:

Select aggregate option, then drag customer id into group fields and order date into Aggregated fields. Change the aggregation level to minimum for order date (shown in below image)

Add another aggregate from the source orders, then drag customer id, order date into group fields while drag sales, product id and category into aggregation levels. Ensure Aggregation level for sales is sum and for product id & category should be distinct counts. It will look something like below screenshot.

We can directly set the type aggregation on field by clicking on field and selecting the type as shown in above image.

Now, its time to use Join operation to join both the aggregate results to get first purchase date, sales, count of products and categories:

Add Join from aggregate 2 then drag Aggregate 1 into join as shown in image:

Then add the join condition on customer id and order date as shown below:

Finally, we have all required fields but we also got duplicate fields for customer id and order date. We will remove this field using cleaning step:

Along with this we will rearrange the data and rename fields for better clarification (order date -> 1st purchase date, sales -> 1st purchase sales, Product id -> 1st purchase products and category -> 1st purchase categories)

We can anytime look at the changes made on the left side under changes section.

With this we have customer ids with their first purchase date, sales, number of distinct products and distinct categories

Step 3: Customer ids with second purchase date, sales, number of distinct products and distinct categories:

Add New join operation on Aggregate 1 and Aggregate 2 with slightly different join criteria where matching customer id but order dates are not equal. (highlighted in Yellow in below image)

Add step to remove duplicate order date-1 and customer id-1 field:

Add Aggregate (Aggregate 3) to find the minimum order date from this data (as we had removed first order date when we applied second join (Join 2))

Add Join (Join 3) from aggregate 3 and apply join with Aggregate 2 on customer id and order date as shown in below images:

Now we have got desired output along with duplicate fields of customer id-1 and order date-1. We will remove this fields using add step operation for cleaning of our dataset.

Along with this we will rearrange the data and rename fields for better clarification (order date -> 2nd purchase date, sales -> 2nd purchase sales, Product id -> 2nd purchase products and category -> 2nd purchase categories)

Now we have both the dataset ready with required information.

Step 4: Combine the dataset (output from clean 1 & clean 3) using join on Customer id

Here we have duplicate field of customer id which we will remove using step operation and rearrange the data to see proper arrangement:

Final step is to export the data into either .hyper or CSV or .tde file using output operation as shown below:

Click on Run now and generate the file. Now, this file can be directly imported into Tableau for data visualisation:

Overall, I really liked the easy nature of Tableau Prep which allows non-technical users to create their own data flow or kind-off ETL to generate the output dataset with necessary transformations.

The final version of Tableau prep file is available Here:

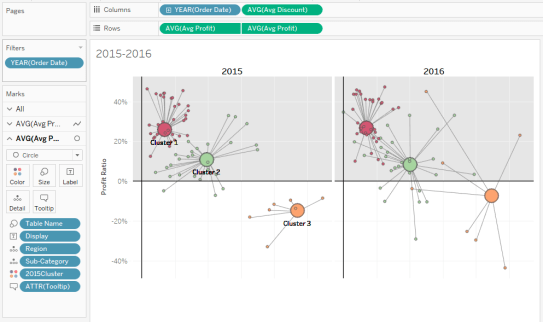

From the output file generated from above process, I had designed the following Tableau data visualisation:

Happy Visualisation!!!

Thanks for visiting blog. Please do let me know your feedback and if any particular topic you would like me to write on.

Do subscribe to Tabvizexplorer.com to keep receive regular updates.